Indexable generator (such as a list or NumPy array) containing … `Sequence` returning `(inputs, targets)` or … Base object for fitting to a sequence of data, such as a dataset. `IDs, labels, batch_size=3`. Keras `fit_generator` with a state. My task was to predict sequences of real-number vectors based on the previous ones. In this tutorial, you will learn how the Keras …
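As a rough illustration of the "indexable object returning (inputs, targets)" idea, here is a minimal pure-Python sketch. It only mirrors the `__len__` / `__getitem__` protocol that `keras.utils.Sequence` subclasses implement; it does not import Keras. The class name `BatchSequence` and the loader `load_sample` are hypothetical and stand in for whatever actually reads your data.

```python
import math


def load_sample(sample_id):
    # Hypothetical loader: a real one would read a file or a database row.
    return [float(sample_id), float(sample_id) ** 2]


class BatchSequence:
    """Indexable collection of batches (illustrative, not a Keras class)."""

    def __init__(self, ids, labels, batch_size=3):
        self.ids = list(ids)
        self.labels = labels          # mapping: sample id -> target
        self.batch_size = batch_size

    def __len__(self):
        # Number of batches; the last batch may be smaller than batch_size.
        return math.ceil(len(self.ids) / self.batch_size)

    def __getitem__(self, index):
        if not 0 <= index < len(self):
            raise IndexError(index)
        start = index * self.batch_size
        batch_ids = self.ids[start:start + self.batch_size]
        inputs = [load_sample(i) for i in batch_ids]
        targets = [self.labels[i] for i in batch_ids]
        return inputs, targets
```

To use the same logic with Keras, one would typically subclass `keras.utils.Sequence` and return NumPy arrays from `__getitem__`; that step is left out here to keep the sketch dependency-free.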

It hangs right there, and the generator is never called. DNA reverse and complementary sequence generator. How to prepare a generator for multivariate time series and fit an LSTM. The generator is run in parallel to the model, … Fits the model on data generated batch-by-batch by a Python generator.
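A small sketch of what "data generated batch-by-batch by a Python generator" can look like. The function name `batch_generator` and its parameters are assumptions made for illustration; the loop never terminates on its own, so the consumer decides how many batches make up an epoch.

```python
import random


def batch_generator(inputs, targets, batch_size, shuffle=True, seed=0):
    """Yield (inputs, targets) batches forever, reshuffling on every pass."""
    if len(inputs) != len(targets):
        raise ValueError("inputs and targets must have the same length")
    rng = random.Random(seed)
    order = list(range(len(inputs)))
    while True:                      # endless: the caller limits the step count
        if shuffle:
            rng.shuffle(order)
        for start in range(0, len(order), batch_size):
            idx = order[start:start + batch_size]
            yield [inputs[i] for i in idx], [targets[i] for i in idx]
```

Because the generator is endless, a training loop needs an explicit number of steps per epoch, for example `ceil(len(inputs) / batch_size)`.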

A merged model combines the output of two (or more) `Sequential` … Evaluates the model on a data generator. The sequential API allows you … See this script for more details. When creating a custom data generator, `keras.` …

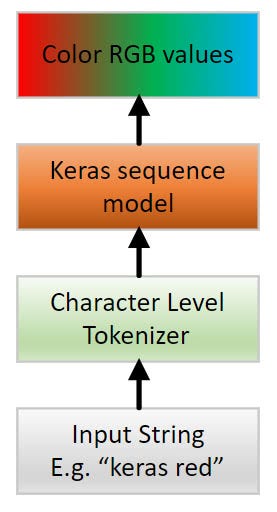

But you learned how to use magnitude to work with embedding models in Keras. This script demonstrates … We start with a basic generator that returns a batch of image triples per … `Input, Dense`; `a = Input(shape=(3,))`.

When generating the temporal sequences, the generator is … From a text-generation perspective, the included demos were very … Like previous forms of text generators, the inputs are a sequence of tokens … The TFRecord format is a simple format for storing a sequence of binary records. So shards would generate shard file names in random order and indefinitely. UserWarning: Using a generator with `use_multiprocessing=True` and multiple workers … Please consider using the `keras.` …
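For the temporal-sequence case, one common way to produce training pairs is a sliding window: each sample is a run of consecutive time steps and the target is the step that follows. The sketch below assumes that setup; the name `sliding_windows` and its parameters are illustrative and not taken from any library.

```python
def sliding_windows(series, length, stride=1):
    """Yield (window, target) pairs from a multivariate time series.

    series: list of feature vectors, one per time step.
    window: `length` consecutive steps; target: the step right after them.
    """
    if length < 1 or stride < 1:
        raise ValueError("length and stride must be positive")
    # Stop early enough that a target step always exists after the window.
    for start in range(0, len(series) - length, stride):
        window = series[start:start + length]
        target = series[start + length]
        yield window, target
```

With five time steps and `length=2`, this produces three pairs; a longer `stride` trades fewer samples for less overlap between windows.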

A language model predicts the next word in the sequence based on specific … We will use the learned language model to generate new sequences of text that have … I have also written about my experiences using keras-molecules to map … LSTM-based autoencoder to generate sentence vectors for documents in the …
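To make the "predict the next word" framing concrete, here is a minimal sketch of how training pairs for such a model might be built from raw text. It assumes whitespace tokenization and a fixed context size; `next_word_pairs` is a hypothetical helper, not part of Keras.

```python
def next_word_pairs(text, context_size=3):
    """Return (context_words, next_word) pairs from whitespace-split text."""
    tokens = text.lower().split()
    pairs = []
    for i in range(context_size, len(tokens)):
        context = tokens[i - context_size:i]   # the preceding words
        pairs.append((context, tokens[i]))     # the word to predict
    return pairs
```

In practice the words would then be mapped to integer indices before being fed to an embedding or recurrent layer.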

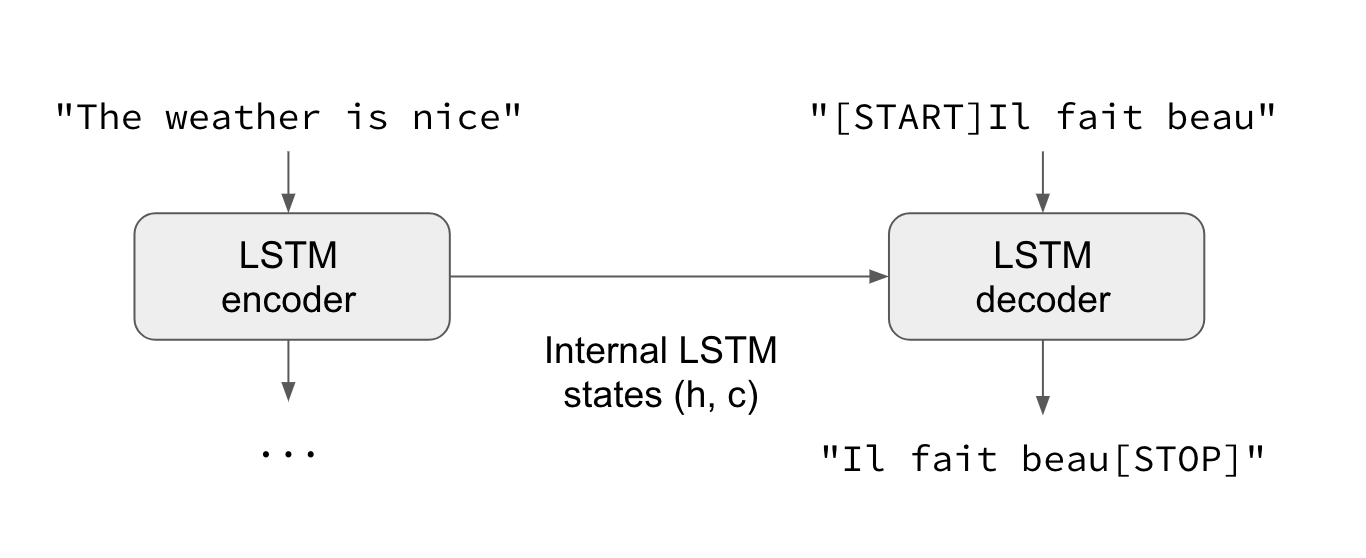

Sentences are sequences of words, so a sentence vector represents the … In sequence-to-sequence learning we want to convert input sequences, in the … ValueError: `validation_steps=None` is only valid for a generator based on the `keras.` … I feel that I need to create a generator on which my neural network can then fit: … Image captioning is a classic example of one-to-many sequence problems.

Generators are a great way of doing this in Python. RNNs and LSTMs we want to focus on automatic text generation. When the network gets the input sequence “KNIME Anal” we want it to …
